Advanced Lesson 2: Performance Optimization

Learning Objectives

By the end of this lesson, you will be able to:

- Identify performance bottlenecks in Manus AI implementations

- Apply optimization techniques for different components of Manus AI systems

- Implement monitoring and benchmarking strategies

- Design scalable solutions for high-demand scenarios

Performance Analysis and Benchmarking

Key Performance Metrics

Understanding and measuring the performance of Manus AI systems:

- Response Time: Total time taken to complete a request, from submission until the full response is received

- Throughput: Number of requests processed per unit of time

- Resource Utilization: CPU, memory, network, and storage usage

- Error Rate: Frequency of failures or incorrect responses

- Latency: Delay before a response begins to arrive, including network transit and queueing time

Performance Metrics Framework:

| Metric | Description | Target Range | Measurement Method |
|---|---|---|---|
| Response Time (P95) | 95th percentile of time to complete requests | < 2000 ms | Application logs, APM tools |
| Throughput | Requests processed per second | > 100 RPS | Load testing, monitoring tools |
| CPU Utilization | Percentage of CPU capacity used | 60-80% | System monitoring |
| Memory Usage | Percentage of available memory used | 70-85% | System monitoring |
| Error Rate | Percentage of requests resulting in errors | < 0.1% | Application logs, error tracking |
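
The metrics in the table can be derived from raw request records. The following is a minimal sketch, assuming each record carries a duration in milliseconds and a success flag. `RequestRecord`, `percentile`, and `summarize` are illustrative names rather than part of any Manus AI SDK, and the percentile uses the nearest-rank method (APM tools may interpolate differently).

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    duration_ms: float  # time taken to complete the request
    ok: bool            # False if the request ended in an error


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("percentile() needs at least one value")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(records, window_seconds):
    """Summarize records that were observed over a window of window_seconds."""
    durations = [r.duration_ms for r in records]
    errors = sum(1 for r in records if not r.ok)
    return {
        "p95_ms": percentile(durations, 95),
        "throughput_rps": len(records) / window_seconds,
        "error_rate_pct": 100 * errors / len(records),
    }


# 100 requests observed over 10 seconds: durations 10, 20, ..., 1000 ms, one failure
records = [RequestRecord(duration_ms=10.0 * i, ok=(i != 100)) for i in range(1, 101)]
summary = summarize(records, window_seconds=10)
print(summary)
```

Comparing each value in `summary` against the target ranges in the table shows which metric, if any, is out of bounds.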

Benchmarking Methodologies

Approaches for establishing performance baselines and comparisons:

- Load Testing: Simulating expected usage patterns to measure performance

- Stress Testing: Pushing the system beyond normal limits to identify breaking points

- Comparative Analysis: Comparing different configurations or implementations

- A/B Testing: Testing specific changes against a control version
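
Comparative analysis can start with a very small timing harness. The sketch below is one possible approach, assuming the two candidates are plain Python callables; `benchmark`, `compare`, and the two string-building functions are stand-ins invented for illustration, not Manus AI operations.

```python
import statistics
import time


def benchmark(fn, runs=5):
    """Call fn() `runs` times and return the median wall-clock duration in seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def compare(candidates, runs=5):
    """Benchmark each named callable; return (name, median_seconds) pairs, fastest first."""
    results = [(name, benchmark(fn, runs)) for name, fn in candidates.items()]
    return sorted(results, key=lambda item: item[1])


# Two stand-in implementations of the same task (concatenating 10,000 strings)
def concat_loop():
    out = ""
    for i in range(10_000):
        out += str(i)
    return out


def concat_join():
    return "".join(str(i) for i in range(10_000))


ranking = compare({"loop": concat_loop, "join": concat_join})
for name, seconds in ranking:
    print(f"{name}: {seconds * 1000:.3f} ms")
```

The median is used instead of the mean so that a single slow run (for example, one disturbed by garbage collection) does not skew the comparison.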

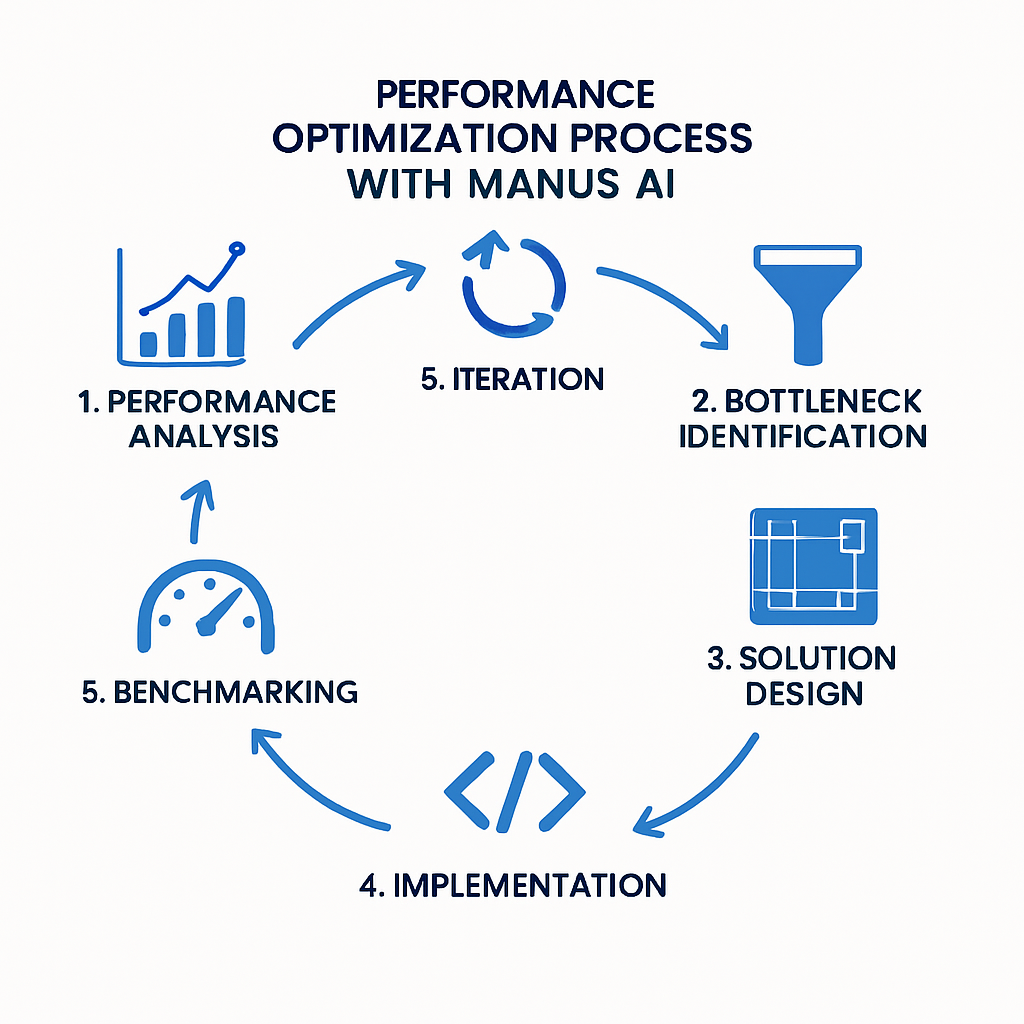

Figure 1: Performance Optimization Process

Load Testing Example:

# Example load testing script using Locust
import os

from locust import HttpUser, task, between

# Read the API token from the environment; "${TOKEN}" is not expanded inside a Python string
TOKEN = os.environ.get("TOKEN", "")
AUTH_HEADERS = {"Authorization": f"Bearer {TOKEN}"}


class ManusAPIUser(HttpUser):
    wait_time = between(1, 3)  # Wait 1-3 seconds between tasks

    # Task weights: generate runs 3x, analyze 2x, summarize 1x per 6 tasks on average
    @task(3)
    def generate_content(self):
        self.client.post("/api/generate", json={
            "prompt": "Write a product description for a smartphone",
            "max_tokens": 500,
            "temperature": 0.7
        }, headers=AUTH_HEADERS)

    @task(2)
    def analyze_text(self):
        self.client.post("/api/analyze", json={
            "text": "The new product exceeded our expectations with its innovative features.",
            "analysis_type": "sentiment"
        }, headers=AUTH_HEADERS)

    @task(1)
    def summarize_document(self):
        self.client.post("/api/summarize", json={
            "url": "https://example.com/article",
            "length": "medium"
        }, headers=AUTH_HEADERS)

# Run with: locust -f locustfile.py --host=https://api.manus.ai

Performance Monitoring

Continuous tracking of system performance:

- Real-Time Monitoring: Tracking performance metrics as they happen

- Alerting: Notifying when metrics exceed thresholds

- Trend Analysis: Identifying patterns and changes over time

- Root Cause Analysis: Determining the source of performance issues

Monitoring Stack Example:

- Metrics Collection: Prometheus

- Visualization: Grafana

- Log Management: Elasticsearch, Logstash, Kibana (ELK Stack)

- Distributed Tracing: Jaeger or Zipkin

- Alerting: Alertmanager with PagerDuty integration
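
In practice, alert thresholds are usually expressed as rules inside the monitoring system itself. To show only the underlying logic, here is a small sketch of a sliding-window error-rate check; `ErrorRateAlert` is a hypothetical class written for this lesson, and the window size and threshold are parameters to choose per service.

```python
from collections import deque


class ErrorRateAlert:
    """Signals when the error rate over the last `window` requests exceeds `threshold_pct`."""

    def __init__(self, window=1000, threshold_pct=0.1):
        # True marks an error; once the deque is full, the oldest outcome drops off
        self.outcomes = deque(maxlen=window)
        self.threshold_pct = threshold_pct

    def record(self, is_error):
        self.outcomes.append(bool(is_error))

    def error_rate_pct(self):
        if not self.outcomes:
            return 0.0
        return 100 * sum(self.outcomes) / len(self.outcomes)

    def should_alert(self):
        return self.error_rate_pct() > self.threshold_pct


alert = ErrorRateAlert(window=100, threshold_pct=1.0)
for _ in range(99):
    alert.record(False)
alert.record(True)
at_threshold = alert.should_alert()     # exactly 1% is not above the threshold

alert.record(True)                      # pushes out one old success
above_threshold = alert.should_alert()  # now 2 errors in the last 100 requests
print(at_threshold, above_threshold)
```

A bounded window keeps the check responsive: old failures age out, so the alert clears once the system recovers.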

Optimization Strategies

API and Request Optimization

Improving the efficiency of API interactions:

- Request Batching: Combining multiple requests into a single API call

- Pagination: Breaking large responses into manageable chunks

- Compression: Reducing the size of request and response payloads

- Request Prioritization: Handling critical requests before less important ones
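
Compression is straightforward to illustrate with the standard library. The sketch below gzips a JSON payload and reports the size difference; whether a given API accepts gzip-encoded request bodies is an assumption to verify against its documentation, and `compress_payload` and `decompress_payload` are illustrative helper names.

```python
import gzip
import json


def compress_payload(payload):
    """Serialize payload to JSON and gzip it; returns (body_bytes, headers)."""
    raw = json.dumps(payload).encode("utf-8")
    body = gzip.compress(raw)
    headers = {"Content-Type": "application/json", "Content-Encoding": "gzip"}
    return body, headers


def decompress_payload(body):
    """Inverse of compress_payload, as a receiving service would apply it."""
    return json.loads(gzip.decompress(body).decode("utf-8"))


# Repetitive text compresses well; real payloads will vary
documents = [
    {"id": i, "text": "The new product exceeded our expectations. " * 20}
    for i in range(50)
]
payload = {"documents": documents}
raw_size = len(json.dumps(payload).encode("utf-8"))
body, headers = compress_payload(payload)
print(f"raw: {raw_size} bytes, gzipped: {len(body)} bytes")
```

Compression trades CPU time for bandwidth, so it tends to pay off for large text payloads and matters little for small ones.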

Request Batching Example:

// Example of request batching in JavaScript
// API_KEY is assumed to be defined elsewhere (for example, loaded from configuration)
async function batchProcessDocuments(documents) {
  // Instead of sending one request per document,
  // send a batch of documents in a single request
  const batchSize = 10;
  const results = [];

  for (let i = 0; i < documents.length; i += batchSize) {
    const batch = documents.slice(i, i + batchSize);

    try {
      const response = await fetch('https://api.manus.ai/batch/analyze', {
        method: 'POST',
        headers: {
          'Content-Type': 'application/json',
          'Authorization': 'Bearer ' + API_KEY
        },
        body: JSON.stringify({
          documents: batch.map(doc => ({
            id: doc.id,
            text: doc.content,
            analysis_type: 'sentiment'
          }))
        })
      });

      // fetch() does not reject on HTTP error statuses, so check explicitly
      if (!response.ok) {
        throw new Error(`Batch request failed with status ${response.status}`);
      }

      const batchResults = await response.json();
      results.push(...batchResults);

      // Respect rate limits with a small delay between batches
      if (i + batchSize < documents.length) {
        await new Promise(resolve => setTimeout(resolve, 100));
      }
    } catch (error) {
      // A failed batch is logged and skipped; its documents are missing from results
      console.error('Error processing batch:', error);
    }
  }

  return results;
}

Caching Strategies

Using caching to improve performance and reduce load:

- Response Caching: Storing and reusing responses for identical requests

- Partial Result Caching: Caching intermediate results for complex operations

- Cache Invalidation: Ensuring cached data remains current and accurate

- Distributed Caching: Sharing cache across multiple instances or regions
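
Cache invalidation comes in two common forms: time-based expiry and explicit removal when the underlying data changes. The sketch below shows both with an in-memory cache. `TTLCache` is a hypothetical class for illustration, and the injectable `clock` exists only so expiry can be demonstrated without sleeping.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire ttl_seconds after they are written."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock   # injectable time source
        self._store = {}     # key -> (expires_at, value)

    def get(self, key, default=None):
        entry = self._store.get(key)
        if entry is None:
            return default
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # lazy expiry: stale entries are dropped on read
            return default
        return value

    def set(self, key, value):
        self._store[key] = (self.clock() + self.ttl_seconds, value)

    def invalidate(self, key):
        """Explicitly drop an entry, e.g. after the source document is edited."""
        self._store.pop(key, None)


now = [0.0]  # fake clock, advanced manually
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])

cache.set("sentiment:doc-1", "positive")
fresh = cache.get("sentiment:doc-1")       # served from cache

now[0] = 60.0
expired = cache.get("sentiment:doc-1")     # TTL reached, entry dropped

cache.set("sentiment:doc-1", "negative")
cache.invalidate("sentiment:doc-1")
invalidated = cache.get("sentiment:doc-1") # removed explicitly
print(fresh, expired, invalidated)
```

Time-based expiry bounds how stale a value can get, while explicit invalidation removes a value as soon as it is known to be wrong; many systems combine the two.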

Figure 2: Multi-Level Caching Architecture

Caching Implementation Example:

# Example of response caching in Python with Redis
# Uses the asyncio client (redis-py 4.2 or later) so cache calls do not block the event loop
import hashlib
import json
from functools import wraps

import redis.asyncio as redis

# Initialize Redis client
redis_client = redis.Redis(host='localhost', port=6379, db=0)


def cache_response(expiration=3600):
    """
    Decorator to cache API responses in Redis

    Args:
        expiration: Cache expiration time in seconds (default: 1 hour)
    """
    def decorator(func):
        @wraps(func)
        async def wrapper(*args, **kwargs):
            # Create a cache key based on function name and arguments
            key_parts = [func.__name__]
            key_parts.extend([str(arg) for arg in args])
            key_parts.extend([f"{k}:{v}" for k, v in sorted(kwargs.items())])

            # Create a hash of the key parts for a compact cache key
            cache_key = hashlib.md5(":".join(key_parts).encode()).hexdigest()

            # Try to get from cache (None means a miss; empty results still count as hits)
            cached_result = await redis_client.get(cache_key)
            if cached_result is not None:
                return json.loads(cached_result)

            # If not in cache, call the original function
            result = await func(*args, **kwargs)

            # Store in cache (the result must be JSON-serializable)
            await redis_client.setex(cache_key, expiration, json.dumps(result))
            return result
        return wrapper
    return decorator


# Example usage
@cache_response(expiration=1800)  # Cache for 30 minutes
async def analyze_sentiment(text):
    # This would normally call the Manus AI API; manus_client is assumed to be
    # an already-configured async client defined elsewhere.
    # For expensive operations, caching saves time and resources
    response = await manus_client.analyze(
        text=text,
        analysis_type='sentiment'
    )
    return response

Resource Optimization

Efficient use of computational resources:

- Memory Management: Optimizing memory usage and preventing leaks

- CPU Optimization: Efficient algorithms and parallel processing

- I/O Optimization: Reducing disk and network operations

- Resource Pooling: Sharing and reusing expensive resources

Resource Optimization Example:

# Example of connection pooling in Python
import aiohttp
import asyncio
from contextlib import asynccontextmanager


class ManusClientPool:
    def __init__(self, api_key, max_connections=10):
        self.api_key = api_key
        self.max_connections = max_connections
        self.semaphore = asyncio.Semaphore(max_connections)
        self.session = None

    async def initialize(self):
        """Initialize the HTTP session pool"""
        if self.session is None or self.session.closed:
            self.session = aiohttp.ClientSession(
                headers={"Authorization": f"Bearer {self.api_key}"}
            )

    async def close(self):
        """Close the HTTP session pool"""
        if self.session and not self.session.closed:
            await self.session.close()

    @asynccontextmanager
    async def acquire(self):
        """Acquire a connection from the pool"""
        await self.initialize()
        async with self.semaphore:
            yield self.session

    async def generate_content(self, prompt, **kwargs):
        """Generate content using a connection from the pool"""
        async with self.acquire() as session:
            async with session.post(
                "https://api.manus.ai/generate",
                json={"prompt": prompt, **kwargs}
            ) as response:
                return await response.json()

    async def analyze_text(self, text, analysis_type, **kwargs):
        """Analyze text using a connection from the pool"""
        async with self.acquire() as session:
            async with session.post(
                "https://api.manus.ai/analyze",
                json={"text": text, "analysis_type": analysis_type, **kwargs}
            ) as response:
                return await response.json()


# Usage example
async def main():
    client_pool = ManusClientPool(api_key="YOUR_API_KEY", max_connections=5)

    try:
        # Process multiple requests efficiently using the connection pool
        tasks = []
        for i in range(20):
            if i % 2 == 0:
                tasks.append(client_pool.generate_content(
                    f"Write a short paragraph about topic {i}",
                    max_tokens=200
                ))
            else:
                tasks.append(client_pool.analyze_text(
                    f"This is sample text {i} for analysis",
                    analysis_type="sentiment"
                ))

        results = await asyncio.gather(*tasks)
        print(f"Processed {len(results)} requests")
    finally:
        await client_pool.close()

# Run the example
asyncio.run(main())

Concurrency and Parallelism

Processing multiple operations simultaneously:

- Asynchronous Processing: Non-blocking operations for improved throughput

- Parallel Processing: Distributing work across multiple processors or threads

- Task Prioritization: Ensuring critical tasks are completed first

- Load Balancing: Distributing work evenly across resources
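
Task prioritization can be sketched with `asyncio.PriorityQueue`, where lower numbers are served first. This is a minimal illustration rather than a production scheduler: `run_prioritized`, `fake_request`, and the job names are all hypothetical, and the sleep stands in for a real network call.

```python
import asyncio
import itertools


async def run_prioritized(jobs, worker_count=2):
    """Run (priority, name, coroutine_function) jobs; lower priority numbers run first."""
    queue = asyncio.PriorityQueue()
    counter = itertools.count()  # tie-breaker: keeps insertion order, avoids comparing functions
    for priority, name, func in jobs:
        queue.put_nowait((priority, next(counter), name, func))

    completed = []

    async def worker():
        while True:
            try:
                _, _, name, func = queue.get_nowait()
            except asyncio.QueueEmpty:
                return  # nothing left to do
            await func()
            completed.append(name)

    await asyncio.gather(*(worker() for _ in range(worker_count)))
    return completed


async def fake_request():
    await asyncio.sleep(0.01)  # stands in for an API call


jobs = [
    (5, "nightly-report", fake_request),
    (1, "interactive-chat", fake_request),
    (3, "batch-summary", fake_request),
]
# A single worker makes the ordering easy to see
order = asyncio.run(run_prioritized(jobs, worker_count=1))
print(order)
```

With more than one worker, jobs are still started in priority order, but they may finish out of order because they run concurrently.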

Concurrency Example:

// Example of concurrent processing in Node.js
// Note: p-limit v4 and later are ESM-only; use v3 with require(), or switch to import
const axios = require('axios');
const pLimit = require('p-limit');

// Create a concurrency limit of 5 simultaneous requests
const limit = pLimit(5);

async function processDocuments(documents) {
  try {
    // Map each document to a limited promise
    const promises = documents.map(doc => {
      return limit(() => processDocument(doc));
    });

    // Wait for all promises to resolve
    const results = await Promise.all(promises);
    console.log(`Successfully processed ${results.length} documents`);
    return results;
  } catch (error) {
    console.error('Error in batch processing:', error);
    throw error;
  }
}

async function processDocument(document) {
  try {
    // First analyze the document
    const analysisResult = await axios.post('https://api.manus.ai/analyze', {
      text: document.content,
      analysis_type: 'comprehensive'
    }, {
      headers: { 'Authorization': `Bearer ${process.env.MANUS_API_KEY}` }
    });

    // Then generate a summary based on the analysis
    const summaryResult = await axios.post('https://api.manus.ai/generate', {
      prompt: `Summarize the following document, focusing on ${analysisResult.data.key_topics.join(', ')}:\n\n${document.content.substring(0, 500)}...`,
      max_tokens: 200
    }, {
      headers: { 'Authorization': `Bearer ${process.env.MANUS_API_KEY}` }
    });

    return {
      document_id: document.id,
      analysis: analysisResult.data,
      summary: summaryResult.data.content
    };
  } catch (error) {
    // Errors are returned per document, so one failure does not reject Promise.all
    console.error(`Error processing document ${document.id}:`, error.message);
    return {
      document_id: document.id,
      error: error.message
    };
  }
}

Scaling Strategies

Horizontal Scaling

Adding more instances to handle increased load:

- Auto-Scaling: Automatically adjusting the number of instances based on demand

- Load Balancing: Distributing requests across multiple instances

- Stateless Design: Ensuring instances can be added or removed without affecting functionality

- Session Management: Handling user sessions across multiple instances
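
Auto-scaling decisions usually reduce to a simple proportional rule. Kubernetes documents the core of its Horizontal Pod Autoscaler as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that rule with minimum and maximum bounds; the function name and parameters are illustrative, and the real controller also applies a tolerance band and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule, clamped to [min_replicas, max_replicas]."""
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    return max(min_replicas, min(max_replicas, raw))


# 5 replicas averaging 90% CPU against a 70% target: scale out
scale_out = desired_replicas(5, 90, 70, min_replicas=3, max_replicas=10)

# 5 replicas averaging 35% CPU: scale in, but not below the minimum
scale_in = desired_replicas(5, 35, 70, min_replicas=3, max_replicas=10)

# 8 replicas averaging 140% of their CPU request: capped at the maximum
capped = desired_replicas(8, 140, 70, min_replicas=3, max_replicas=10)
print(scale_out, scale_in, capped)
```

Rounding up errs on the side of extra capacity, which is why a small overshoot of the target already adds a replica.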

Figure 3: Horizontal vs. Vertical Scaling

Vertical Scaling

Increasing the resources of existing instances:

- Resource Allocation: Adding more CPU, memory, or storage to existing instances

- Hardware Optimization: Using specialized hardware for specific tasks

- Instance Sizing: Selecting appropriate instance types for different workloads

- Resource Monitoring: Tracking resource usage to identify scaling needs

Distributed Architecture

Designing systems that operate across multiple locations or environments:

- Microservices: Breaking down functionality into independent services

- Service Mesh: Managing communication between distributed services

- Edge Computing: Processing data closer to where it's needed

- Multi-Region Deployment: Distributing services across geographic regions

Distributed Architecture Example:

# Example Kubernetes configuration for a distributed Manus AI deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: manus-api-gateway
  namespace: manus-system
spec:
  replicas: 3
  selector:
    matchLabels:
      app: manus-api-gateway
  template:
    metadata:
      labels:
        app: manus-api-gateway
    spec:
      containers:
        - name: api-gateway
          image: manus/api-gateway:v1.2.3
          ports:
            - containerPort: 8080
          resources:
            requests:
              memory: "256Mi"
              cpu: "100m"
            limits:
              memory: "512Mi"
              cpu: "500m"
          livenessProbe:
            httpGet:
              path: /health
              port: 8080
            initialDelaySeconds: 30
            periodSeconds: 10
          readinessProbe:
            httpGet:
              path: /ready
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 5
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: manus-content-generator
  namespace: manus-system
spec:
  replicas: 5
  selector:
    matchLabels:
      app: manus-content-generator
  template:
    metadata:
      labels:
        app: manus-content-generator
    spec:
      containers:
        - name: content-generator
          image: manus/content-generator:v1.2.3
          resources:
            requests:
              memory: "1Gi"
              cpu: "500m"
            limits:
              memory: "4Gi"
              cpu: "2000m"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: manus-analyzer
  namespace: manus-system
spec:
  replicas: 3
  selector:
    matchLabels:
      app: manus-analyzer
  template:
    metadata:
      labels:
        app: manus-analyzer
    spec:
      containers:
        - name: analyzer
          image: manus/analyzer:v1.2.3
          resources:
            requests:
              memory: "512Mi"
              cpu: "250m"
            limits:
              memory: "2Gi"
              cpu: "1000m"
---
apiVersion: v1
kind: Service
metadata:
  name: manus-api-gateway
  namespace: manus-system
spec:
  selector:
    app: manus-api-gateway
  ports:
    - port: 80
      targetPort: 8080
  type: LoadBalancer
---
apiVersion: v1
kind: Service
metadata:
  name: manus-content-generator
  namespace: manus-system
spec:
  selector:
    app: manus-content-generator
  ports:
    - port: 8080
      targetPort: 8080
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  name: manus-analyzer
  namespace: manus-system
spec:
  selector:
    app: manus-analyzer
  ports:
    - port: 8080
      targetPort: 8080
  type: ClusterIP
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: manus-content-generator-hpa
  namespace: manus-system
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: manus-content-generator
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70

Advanced Optimization Techniques

Predictive Scaling

Anticipating resource needs before they occur:

- Usage Pattern Analysis: Identifying recurring patterns in system usage

- Predictive Models: Using machine learning to forecast resource needs

- Proactive Scaling: Adjusting resources before demand increases

- Scheduled Scaling: Adjusting resources based on known busy periods
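
A very simple form of predictive scaling averages past load for the same hour of day and provisions capacity ahead of time. The sketch below assumes hourly request rates have already been collected; the history values, the per-instance capacity of 100 requests per second, and the 25% headroom are made-up illustration numbers, and a production forecast would use a proper time-series model.

```python
import math
from statistics import mean


def forecast_for_hour(history, hour):
    """Average the observed requests/second at `hour` across all recorded days."""
    return mean(day[hour] for day in history)


def instances_needed(forecast_rps, rps_per_instance, headroom=1.25, min_instances=2):
    """Instances required to serve the forecast load with a safety margin."""
    return max(min_instances, math.ceil(forecast_rps * headroom / rps_per_instance))


# Hypothetical history: requests/second by hour of day for three past days
history = [
    {3: 20, 9: 380},
    {3: 30, 9: 400},
    {3: 40, 9: 420},
]

morning_rps = forecast_for_hour(history, 9)
morning_instances = instances_needed(morning_rps, rps_per_instance=100)

night_rps = forecast_for_hour(history, 3)
night_instances = instances_needed(night_rps, rps_per_instance=100)
print(morning_rps, morning_instances, night_rps, night_instances)
```

The resulting counts can feed a scheduled scaling action that runs shortly before each hour, so capacity is in place before the demand arrives.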

Cost Optimization

Balancing performance with resource costs:

- Resource Right-Sizing: Matching resource allocation to actual needs

- Spot Instances: Using lower-cost, interruptible resources for non-critical tasks

- Reserved Capacity: Pre-purchasing capacity for predictable workloads

- Cost Monitoring: Tracking and optimizing resource expenditure
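
The effect of mixing on-demand and spot capacity can be estimated with basic arithmetic. In the sketch below, the hourly prices are placeholder values chosen only to make the calculation easy to follow; they are not real cloud prices, and `hourly_cost` and `savings_pct` are illustrative helpers.

```python
def hourly_cost(on_demand_count, spot_count, on_demand_price, spot_price):
    """Total hourly cost of a fleet that mixes on-demand and spot instances."""
    return on_demand_count * on_demand_price + spot_count * spot_price


def savings_pct(baseline_cost, optimized_cost):
    """Percentage saved relative to the baseline."""
    return 100 * (baseline_cost - optimized_cost) / baseline_cost


# Placeholder prices in cents per instance-hour (NOT real pricing)
ON_DEMAND_PRICE = 4.0
SPOT_PRICE = 1.0

all_on_demand = hourly_cost(10, 0, ON_DEMAND_PRICE, SPOT_PRICE)
mixed_fleet = hourly_cost(2, 8, ON_DEMAND_PRICE, SPOT_PRICE)
saved = savings_pct(all_on_demand, mixed_fleet)
print(f"all on-demand: {all_on_demand}, mixed: {mixed_fleet}, saved: {saved}%")
```

Spot capacity can be reclaimed at short notice, so the on-demand portion should be sized to carry the minimum acceptable load on its own.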

Cost Optimization Example:

# Example Terraform configuration for cost-optimized AWS deployment
module "manus_api_service" {
  source = "./modules/ecs-service"

  name       = "manus-api"
  cluster_id = aws_ecs_cluster.main.id

  # Use a mix of instance types for cost optimization
  instance_types = {
    on_demand = {
      type   = "t3.medium"
      count  = 2 # Minimum guaranteed capacity
      weight = 1
    }
    spot = {
      types  = ["t3.medium", "t3a.medium", "t2.medium"]
      count  = 8 # Maximum additional capacity
      weight = 3
    }
  }

  # Auto-scaling based on demand
  auto_scaling = {
    min_capacity           = 2
    max_capacity           = 10
    target_cpu_utilization = 70
    scale_in_cooldown      = 300
    scale_out_cooldown     = 60
  }

  # Schedule-based scaling for known peak times
  scheduled_scaling = [
    {
      name         = "business-hours"
      schedule     = "cron(0 8 ? * MON-FRI *)" # 8 AM weekdays
      min_capacity = 4
      max_capacity = 10
    },
    {
      name         = "evening-scale-down"
      schedule     = "cron(0 18 ? * MON-FRI *)" # 6 PM weekdays
      min_capacity = 2
      max_capacity = 6
    },
    {
      name         = "weekend-scale-down"
      schedule     = "cron(0 0 ? * SAT-SUN *)" # Midnight on weekends
      min_capacity = 2
      max_capacity = 4
    }
  ]

  # Lifecycle policy to terminate instances based on cost
  termination_policies = [
    "OldestLaunchTemplate",
    "OldestLaunchConfiguration",
    "ClosestToNextInstanceHour"
  ]
}

Performance Tuning for Specific Use Cases

Optimizing for particular scenarios:

- High-Volume Processing: Handling large numbers of requests efficiently

- Low-Latency Requirements: Minimizing response times for time-sensitive applications

- Batch Processing: Efficiently handling large batches of work

- Real-Time Processing: Ensuring immediate processing of incoming data

Practical Exercise: Performance Optimization Plan

Develop a performance optimization plan for a Manus AI implementation:

- Select a specific use case for Manus AI (e.g., content generation, data analysis)

- Identify potential performance bottlenecks and challenges

- Design a monitoring and benchmarking strategy

- Outline specific optimization techniques for different components

- Create a scaling plan to handle increasing demand

Knowledge Check: Performance Optimization

Question 1

Which of the following is NOT a key performance metric for Manus AI systems?

Code complexity is not a key performance metric for Manus AI systems. While code complexity can affect maintainability and development efficiency, it is not directly related to runtime performance. The key performance metrics include response time (how long it takes to complete a request), throughput (number of requests processed per unit of time), resource utilization, error rate, and latency.

Question 2

Which caching strategy involves storing and reusing intermediate results during complex operations?

Partial result caching involves storing and reusing intermediate results during complex operations. This strategy is particularly useful when operations involve multiple steps or calculations, allowing the system to avoid repeating work that has already been done. For example, in a multi-stage analysis pipeline, the results of early stages can be cached and reused for different final outputs.

Question 3

Which scaling approach involves adding more CPU, memory, or storage to existing instances?

Vertical scaling involves adding more CPU, memory, or storage to existing instances. This approach increases the capacity of individual servers rather than adding more servers (which would be horizontal scaling). Vertical scaling is often simpler to implement but has upper limits based on the maximum capacity of available hardware.
